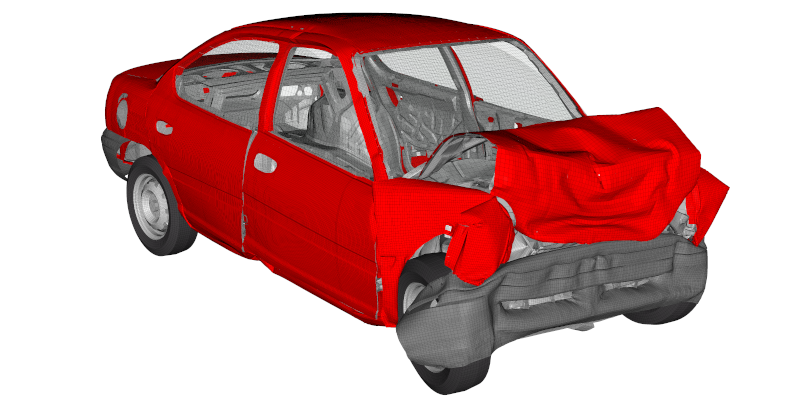

This whitepaper, funded by AMD, discusses current challenges in CAE and explains how AMD EPYC 7003 series processors with V-Cache technology and HPE Apollo Gen10 Plus servers are an ideal solution for manufacturers.

In today’s hyper-competitive business environment, data is at the heart of everything we do. Business leaders rely on up-to-date data to better understand their customers and competitors, make better, more informed decisions, and support new business initiatives. This paper examines some of the challenges with existing data pipelines and discusses five considerations for building a

COVID-19 was the first pandemic to emerge in the era of modern bioinformatics. Government and private labs were forced to dramatically scale their compute infrastructure for everything from the development of vaccines and therapeutics to genomic surveillance and variant tracking. Seqera Labs was one of the technology companies in the thick of the COVID battle.

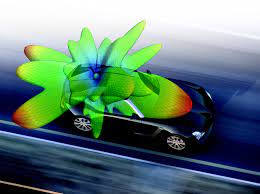

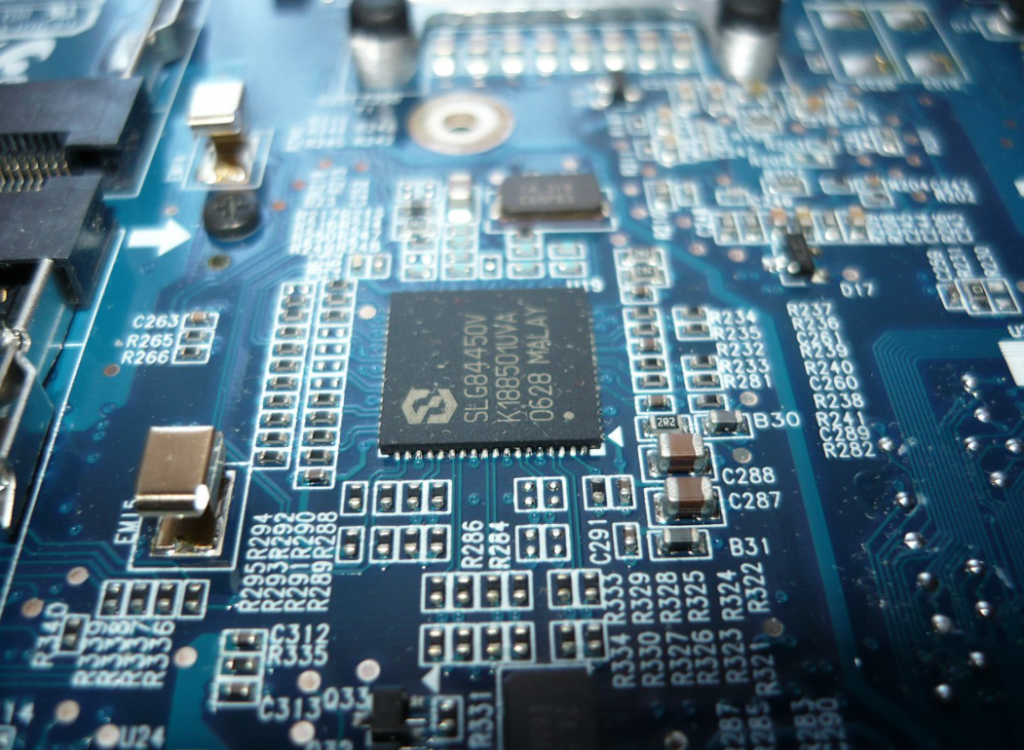

In the age of the internet and IoT, the need for electromagnetic simulation is on the rise. Today’s electronic devices bristle with electronic transmitters and sensors of all kinds. I recently had the opportunity to work with smart people at Altair and AMD to document a series of interesting electromagnetics benchmarks conducted with Altair Feko.

I recently had the opportunity to work with Incorta developing an architecture guide for their Unified Data Analytics Platform. The guide is now live on Incorta’s website. This guide explores the architecture of Incorta’s unified data analytics platform and how it can augment or replace legacy analytics environments while taking a new and innovative approach

Altair Engineering have an impressive portfolio of solutions for electronic design automation. I recently had the opportunity to develop a technical whitepaper describing products in the Altair Accelerator portfolio. You can view or download the whitepaper here.

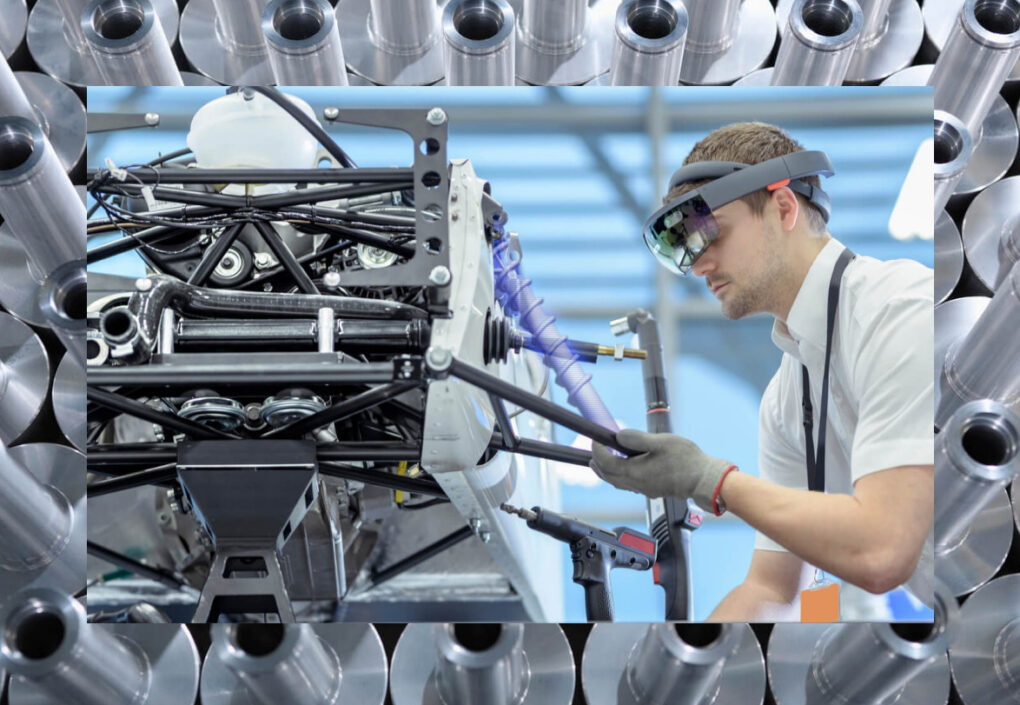

I recently completed work on the attached article for Altair and AMD published by InsideHPC. The combination of Altair structural and CFD solvers and the latest AMD EPYC 7003 series processors delivers industry-leading performance for CAE applications. You can read about the detailed benchmark results here: Taking Altair Structural & CFD Analysis to New Heights

Managing the trade-off between consistency and availability is nothing new in distributed databases. It’s such a well-known issue that there is a theorem to describe it. While modern databases don’t tend to fall neatly into categories, the CAP theorem (also known as Brewer’s theorem) is still a useful place to start. The CAP theorem says

I recently completed a project working with Altair studying the pros and cons of open-source vs. commercial workload management. Having worked for years in HPC at both Platform Computing and IBM, this topic was right in my wheelhouse. You can download the whitepaper here: A Cost-benefit Look at Open-source vs. Commercial HPC Workload Managers (altair.com) High-performance

When it comes to high-performance computing (HPC), engineers can never get enough performance. Even minor improvements at the chip level can have a dramatic financial impact in hyper-competitive industries such as computer-aided engineering (CAE) for manufacturing and electronic design automation (EDA). With their respective x86 processor lineups, Intel and AMD continue to battle for bragging rights,