Note: this article was written for IBM for publication in InsideHPC before the widespread popularity of models like ChatGPT, Gemini, and Llama. Today there are several tools that can detect text written by AI including GPTZero, Turnitin AI Writing Detection, and Copyleaks AI Detector.

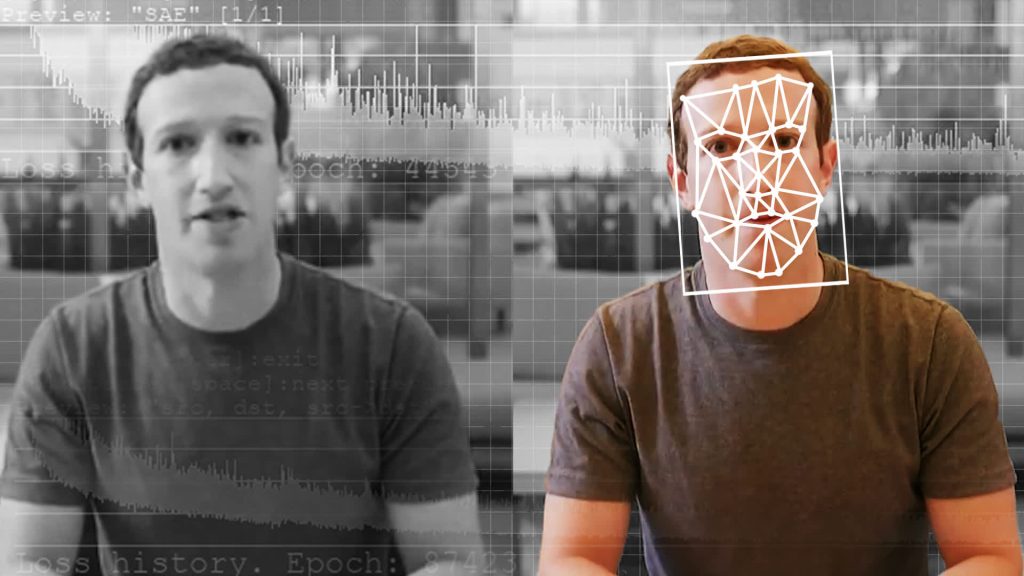

Apocalyptic warnings about the risks of AI are hardly new. Stephen Hawking warned that “the development of full AI could spell the end of the human race”(1) and Elon Musk has declared unregulated AI more dangerous than nuclear weapons(2). While computers aren’t out-thinking humans just yet, we’re already confronting the first challenge of the AI-age – the rise of “deepfakes”, exploiting AI techniques to undermine trust and threaten our ability to know what is real.

The challenge of deepfakes

A deepfake refers to the use of machine learning algorithms to generate fake images or videos that are difficult to distinguish from reality. Two years ago, the term was largely associated with fake nudes of celebrities. This is bad enough, but in our charged political climate, rife with accusations of “fake news” and social media manipulation, the risks have become more dire. The problem of deepfakes has grown beyond simple face-swapping and video manipulation. Fake images and videos can now be created entirely from scratch (see https://thispersondoesnotexist.com/) with only modest investments in tools and computing capacity.

A bigger problem than just videos

AI-powered systems can be used to generate fake text, online experiences, and even phone conversations. To raise public awareness of the dangers posed by deepfakes, OpenAI, a non-profit dedicated to safe and beneficial AI, released GPT-2(3) in February of 2019, an AI-powered algorithm able to write text and articles. OpenAI released an intentionally hobbled version of their model trained with a small dataset because they viewed the technology as dangerous. Prompted with a sentence or two, GPT-2 can expound on any topic, writing paragraphs of text that most readers would assume were written by a human.

The impacts of AI-generated writing are profound. From social media posts to chatbots to e-mail correspondence, it is becoming harder to recognize computer-generated content. By some estimates, up to 30% of product reviews on Amazon are now fake(4) posing serious brand-related risks to suppliers, retailers, and online providers.

AI generated text coupled with fake images, video, and voice synthesis dramatically lowers the cost of spreading mischief. Aside from spreading chaos in our politics, we can expect a new wave of cybersecurity threats such as digital forgery, identity theft enabled by hacked digital voices, and even law enforcement challenges – “Proof beyond a reasonable doubt” will become a much higher threshold as the public becomes increasingly mistrustful of all digital media.

A cat and mouse game

We’re in the midst of an escalating battle between AI-based image/video/audio/text generators and AI-based detectors. This cat and mouse game is similar to the offense/defense dynamic that plays out with counterfeit currencies, bacteria and antibiotics, and malicious software vs. anti-virus platforms.

Generative Adversarial Networks (GANs) are a relatively new concept in machine learning. A deep learning model might be trained to generate fake images based on a training dataset. The generator can then be coupled with a discriminator; a separate deep learning model trained to predict whether images created by the generator are real or fake. These opposing models (GANs) learn from one another, such that both become increasingly sophisticated over time.

Not all GANs are evil – read the IBM Research Blog GANS for Good

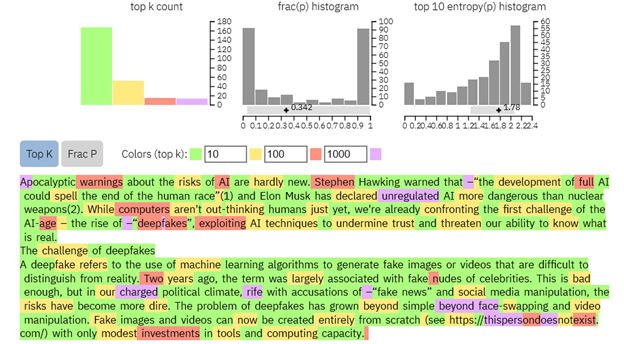

To counter threats such as AI generated text, researchers at the MIT-IBM Watson lab and AI HarvardNLP introduced the Giant Language Test Room(5) (GLTR), deep learning models designed to detect fake text generated by models such as OpenAI’s GPT-2. You can try the GLTR fake text detector yourself at http://gltr.io/dist/index.html

As firms “up their game” detecting fake video and text, attackers need to fool not only humans but AI-based deepfake detection algorithms as well. Business guarding against fraud are deploying ensembles of detection algorithms, but if the detectors are known in advance, adversaries can train their models to defeat detection. In this area, open-source models can be a bad thing. IBM and others are understandably “tight-lipped” about state-of-the-art detectors. Customers can employ solutions, including IBM Watson Studio, to develop high-quality models to guard against a wide variety of deepfakes and cyber threats. IBM Watson Machine Learning Accelerator helps organizations deploy, manage, and maintain these models in production and respond quickly to shifting threats.

Human limitations throw fuel on the fire

Distinguishing between legitimate and fake content is more than just a technology problem. There are deeper issues at play, including human bias and media literacy. As humans, we have “cognitive glitches” that can be exploited by clever adversaries. A good example is confirmation bias, where we tend to seek out and trust information that confirms our prior beliefs. Human neural networks may prove harder to retrain than their silicon counterparts. Even the best fake detector can’t necessarily overrule an audience predisposed to believe misinformation.

Quality and veracity of training data is another issue in combating AI-driven misinformation. In several well-publicized examples, facial recognition systems used by police have proven controversial by disproportionately misidentifying faces of minorities(6) – a consequence of training datasets over-represented by white males. This is a rather shocking human oversight if we think about it. IBM is active here also providing the Diversity in Faces (DiF) dataset to help organizations develop facial recognition that is more fair and free of general and racial bias.

Pure research in understanding context, and how we, as humans think, will help us build more capable algorithms over time. Efforts such as IBM’s Project Debater, a follow-on to earlier efforts such as IBM Deep Blue and IBM Watson (the reigning Jeopardy champ) will provide new insights into how humans think and process information, and provide new tools to help combat deepfakes and fraud.

So, who actually wrote this article?

After learning about deepfakes, you could be forgiven for asking – was this article written by Gordon Sissons or a predictive model similar to GPT-2 impersonating Gord?

Running this article content through the GLTR model, it exhibits the “Christmas tree-like” characteristics of material written by humans with plenty of red, yellow and purple, and an uncertainty histogram skewed to the right, suggesting that the article probably wasn’t generated by an AI. What’s worrisome, however, is that sophisticated fake text generators are starting to exhibiting these same characteristics – even now, we cannot be completely sure whether this article is real or fake.

- Stephen Hawking on Artificial Intelligence – BBC, 2014: https://www.bbc.com/news/technology-30290540

- Elon Musk, SXSW, March 2018 – https://www.cnbc.com/2018/03/13/elon-musk-at-sxsw-a-i-is-more-dangerous-than-nuclear-weapons.html

- Source-code available for GPT-2 illustrates how easy it is to generate fake text – https://github.com/openai/gpt-2

- CBS New Feb 2019 – Buyer Beware – the scourge of fake reviews gitting Amazon, Walmart and other major retailers – https://www.cbsnews.com/news/buyer-beware-a-scourge-of-fake-online-reviews-is-hitting-amazon-walmart-and-other-major-retailers/

- Catching a Unicorn with GLTR: a tool to detect computer generated text – http://gltr.io/

- The problem with AI? Study says it’s too white and male, calls for more women, minorities – https://www.usatoday.com/story/tech/2019/04/17/ai-too-white-male-more-women-minorities-needed-facial-recognition/3451932002/